Azure Blob Storage: Managing Unstructured Data

Managing large amounts of unstructured data effectively and affordably is a challenge. In this guide, we explore Azure Blob Storage, a robust solution for storing everything from media files to backups. By looking at features such as access tiers, lifecycle management, object replication, and pricing, we'll see how Azure Blob Storage can be tailored to meet a company's specific needs.

Implement Azure Blob Storage

Azure Blob Storage is a service that stores unstructured data in the cloud as objects, or blobs. Blob stands for Binary Large Object. Blob Storage is also referred to as object storage or container storage.

Things to know

- Blob Storage can store any type of text or binary data, such as text documents, images, video files, and application installers.

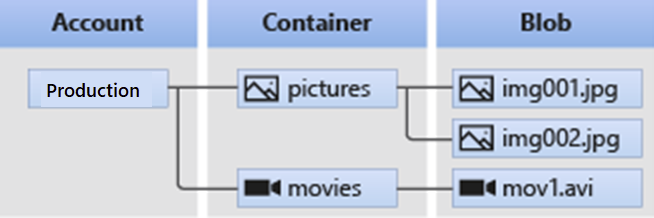

- Uses three resources to store and manage data:

  - Azure Storage account.

  - Containers in the storage account.

  - Blobs in the container.

- To implement Blob Storage, you configure several settings:

  - Blob container options.

  - Blob types and upload options.

  - Blob Storage access tiers.

  - Blob lifecycle rules.

  - Blob object replication options.

This diagram shows the relationship between the Blob Storage resources:

Create blob containers

Azure Blob Storage uses a container resource to group a set of blobs. A blob can't exist by itself in Blob Storage. A blob must be stored in a container resource.

Things to know

- All blobs must be in a container.

- A container can store an unlimited number of blobs.

- An Azure storage account can contain an unlimited number of containers.
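To make the account, container, and blob hierarchy concrete, here is a minimal Python sketch that builds a blob's URL from its three parts. It assumes the default public-cloud endpoint format `https://<account>.blob.core.windows.net/<container>/<blob>` and checks the standard naming rules for accounts and containers; the names `contosomedia` and `videos` are hypothetical.

```python
import re
from urllib.parse import quote

# Naming rules: accounts use 3-24 lowercase letters/digits; containers use 3-63
# lowercase letters, digits, and hyphens, start and end with a letter or digit,
# and never contain two hyphens in a row.
ACCOUNT_NAME = re.compile(r"[a-z0-9]{3,24}")
CONTAINER_NAME = re.compile(r"[a-z0-9](?!.*--)[a-z0-9-]{1,61}[a-z0-9]")


def blob_url(account: str, container: str, blob_name: str) -> str:
    """Return the URL of a blob: every blob lives in a container inside an account."""
    if not ACCOUNT_NAME.fullmatch(account):
        raise ValueError(f"invalid storage account name: {account!r}")
    if not CONTAINER_NAME.fullmatch(container):
        raise ValueError(f"invalid container name: {container!r}")
    if not blob_name:
        raise ValueError("a blob must have a name within its container")
    # '/' in a blob name acts as a virtual folder separator, so it stays unescaped;
    # other unsafe characters (such as spaces) are percent-encoded.
    path = quote(blob_name, safe="/")
    return f"https://{account}.blob.core.windows.net/{container}/{path}"


print(blob_url("contosomedia", "videos", "2024/launch event.mp4"))
# https://contosomedia.blob.core.windows.net/videos/2024/launch%20event.mp4
```

Because a blob cannot exist outside a container, the function refuses to build a URL without both a valid container name and a blob name.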

Configure a container

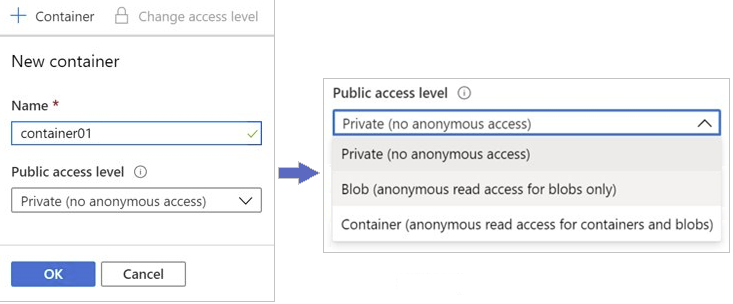

- Public access level: Specifies whether the container and its blobs can be accessed publicly. By default, container data is private and visible only to the account owner. There are three access levels:

  - Private: Prohibit anonymous access to the container and blobs.

  - Blob: Allow anonymous public read access for the blobs only.

  - Container: Allow anonymous public read and list access to the entire container, including the blobs.

`New-AzStorageContainer` is the Azure PowerShell cmdlet that creates a new container. Its `-Permission` parameter (`Off`, `Blob`, or `Container`) sets the public access level.
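The three public access levels amount to a simple decision about unauthenticated requests. The Python sketch below models that decision; the operation labels `"read_blob"` and `"list_blobs"` are hypothetical names for this illustration. It ignores the storage-account setting that can disallow anonymous access altogether, and any other operation (such as writes or deletes) always requires authorization.

```python
from enum import Enum


class PublicAccess(Enum):
    """Container public access levels described above."""
    PRIVATE = "private"      # no anonymous access
    BLOB = "blob"            # anonymous read of individual blobs only
    CONTAINER = "container"  # anonymous read of blobs plus listing the container


def anonymous_request_allowed(level: PublicAccess, operation: str) -> bool:
    """Decide whether an unauthenticated request is permitted at this level."""
    if operation == "read_blob":
        return level in (PublicAccess.BLOB, PublicAccess.CONTAINER)
    if operation == "list_blobs":
        return level is PublicAccess.CONTAINER
    # Anonymous access is read-only: everything else needs authorization.
    return False


for level in PublicAccess:
    print(level.value,
          anonymous_request_allowed(level, "read_blob"),
          anonymous_request_allowed(level, "list_blobs"))
# private False False
# blob True False
# container True True
```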

Blob Access tiers

- Hot tier: Optimized for storing data that is accessed frequently. It has the lowest access costs but the highest storage costs.

- Cool tier: Optimized for storing data that is infrequently accessed and stored for at least 30 days, such as short-term backup and disaster recovery datasets and older media content. Accessing data in the Cool tier costs more than accessing data in the Hot tier.

- Archive tier: Optimized for storing data that is rarely accessed and stored for at least 180 days, such as long-term backups, secondary backup copies, and archival datasets. It is the most cost-effective tier for storing data, but accessing data costs more than in the other tiers. Archived blobs are offline and must be rehydrated to the Hot or Cool tier before they can be read, which can take hours.

| Characteristic | Hot tier | Cool tier | Archive tier |
|---|---|---|---|
| Availability | 99.9% | 99% | Offline |
| Availability (RA-GRS reads) | 99.99% | 99.9% | Offline |
| Latency (time to first byte) | Milliseconds | Milliseconds | Hours |
| Minimum storage duration | N/A | 30 days | 180 days |
| Usage costs | Higher storage costs; lower access and transaction costs | Lower storage costs; higher access and transaction costs | Lowest storage costs; highest access and transaction costs |
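The minimum storage duration row matters because a blob that is deleted or moved out of the Cool or Archive tier before that period ends incurs a prorated early deletion charge for the remaining days. A minimal Python sketch of that arithmetic, using only the durations from the table (Hot has no minimum, modeled here as 0):

```python
# Minimum storage duration per access tier, in days (from the comparison table).
# Hot has no minimum (N/A), which this sketch models as 0.
MINIMUM_DAYS = {"hot": 0, "cool": 30, "archive": 180}


def early_deletion_days(tier: str, days_in_tier: int) -> int:
    """Days still billed if a blob is deleted or re-tiered after `days_in_tier` days."""
    if days_in_tier < 0:
        raise ValueError("days_in_tier cannot be negative")
    return max(0, MINIMUM_DAYS[tier.lower()] - days_in_tier)


print(early_deletion_days("archive", 45))  # 135
print(early_deletion_days("cool", 21))     # 9
print(early_deletion_days("hot", 3))       # 0
```

For example, a blob moved out of Archive after 45 days is still billed as if it had stayed for the remaining 135 days.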

Blob Lifecycle management

- Data Lifecycle: Datasets have unique lifecycles, and their access patterns change over time. Some data becomes idle or expires, while other data is actively read or modified.

- Azure Blob Storage Lifecycle Management: Azure offers rule-based policies for managing the lifecycle of data in GPv2 and Blob Storage accounts.

- Tasks You Can Accomplish:

  - Transition blobs between storage tiers (Hot to Cool, Hot to Archive, Cool to Archive) to balance performance and cost.

  - Delete blobs at the end of their lifecycle.

  - Define rule-based conditions that are evaluated once a day at the storage account level.

  - Apply rules to specific containers or subsets of blobs.

- Business Scenario Example: For data that's frequently accessed early on but rarely after a month, lifecycle rules can move data from Hot to Cool to Archive tiers to optimize costs and accessibility.

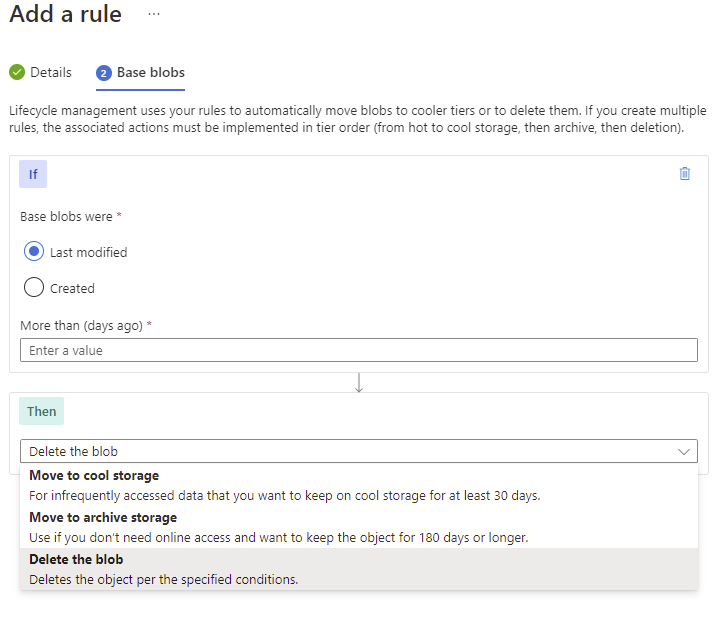
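The business scenario above can be simulated in a few lines. This Python sketch assumes hypothetical thresholds (Cool after 30 days, Archive after 90, delete after 365, all counted from the last modification) and returns the action one daily policy run would take for a blob. When several conditions match, lifecycle management applies the least expensive action, so the checks run from delete down to Cool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgingRule:
    """Hypothetical thresholds: days since the blob was last modified."""
    cool_after: int = 30
    archive_after: int = 90
    delete_after: int = 365


def lifecycle_action(days_since_modified: int, rule: AgingRule) -> str:
    """Return the action a daily policy run would take for one blob."""
    # When several conditions match, lifecycle management applies the least
    # expensive action (delete, then archive, then cool), so test in that order.
    if days_since_modified > rule.delete_after:
        return "delete"
    if days_since_modified > rule.archive_after:
        return "archive"
    if days_since_modified > rule.cool_after:
        return "cool"
    return "stay hot"


rule = AgingRule()
for days in (10, 45, 120, 400):
    print(days, lifecycle_action(days, rule))
# 10 stay hot
# 45 cool
# 120 archive
# 400 delete
```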

Configure lifecycle management policy rules

Lifecycle management policy rules, which you can configure in the Azure portal, let you customize how data is handled over its lifecycle in an Azure storage account.

- Creating Rules: You use If-Then block conditions to transition or expire data based on the criteria you specify.

  - If Clause: The evaluation part, where you set the time condition for the blob data. It checks whether the data was last accessed or modified more than a specified number of days ago (e.g., "More than (days ago)").

  - Then Clause: The action part, where you set what happens when the "If" condition is met. Actions include:

    - Move to Cool Storage: Transition the data to the Cool tier.

    - Move to Archive Storage: Transition the data to the Archive tier.

    - Delete the Blob: Delete the data entirely.

- Goal: By setting these rules, you can ensure that your data is stored in the most cost-effective way, moving it between storage tiers or deleting it as it ages.
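Behind the portal's If/Then form, a lifecycle rule is stored as a JSON policy document. The Python sketch below builds one using the policy's `rules`/`definition`/`filters`/`actions` layout and the `daysAfterModificationGreaterThan` condition. The rule name `age-out-logs` and the `logs/` prefix (meaning every blob in a container named `logs`) are hypothetical, and the check that the day thresholds increase is this sketch's own sanity check rather than an Azure rule.

```python
import json


def build_lifecycle_policy(rule_name, prefixes, cool_days, archive_days, delete_days):
    """Translate If/Then choices into a lifecycle management policy document."""
    if not cool_days < archive_days < delete_days:
        raise ValueError("expected cool_days < archive_days < delete_days")

    def older_than(days):
        # "If" clause: base blob last modified more than `days` days ago.
        return {"daysAfterModificationGreaterThan": days}

    return {
        "rules": [
            {
                "enabled": True,
                "name": rule_name,
                "type": "Lifecycle",
                "definition": {
                    # Scope: block blobs whose names start with these prefixes;
                    # each prefix begins with the container name.
                    "filters": {
                        "blobTypes": ["blockBlob"],
                        "prefixMatch": list(prefixes),
                    },
                    # "Then" clause: move to Cool, then Archive, then delete.
                    "actions": {
                        "baseBlob": {
                            "tierToCool": older_than(cool_days),
                            "tierToArchive": older_than(archive_days),
                            "delete": older_than(delete_days),
                        }
                    },
                },
            }
        ]
    }


policy = build_lifecycle_policy("age-out-logs", ["logs/"], 30, 90, 365)
print(json.dumps(policy, indent=2))
```

The printed JSON could then be pasted into the portal's code view or, for example, passed to the Azure CLI's `az storage account management-policy create` command.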

Blob Object Replication

- What It Is: Asynchronously copies blobs from a container in a source storage account to a container in a destination account, according to configured policy rules. The copy includes the blob's contents, metadata, properties, and associated versions.

- Requirements:

  - Blob versioning must be enabled on both the source and destination accounts, and the blob change feed must be enabled on the source account.

  - Supported only when the source and destination accounts are in the Hot or Cool tier (the two accounts can be in different tiers).

  - Blob snapshots are not supported; they won't be replicated.

- Configuration:

  - You create a replication policy that specifies the source and destination storage accounts.

  - The policy includes rules that specify the source and destination containers and identify which blobs to replicate.

- Benefits:

  - Latency Reduction: Minimizes latency by letting clients read data from a nearer region.

  - Efficiency for Compute Workloads: Lets compute workloads in different regions process the same set of blobs.

  - Data Distribution Optimization: Lets you process or analyze data in one location and replicate just the results to other regions.

  - Cost Benefits: After data is replicated, you can reduce costs by moving it to the Archive tier with lifecycle management policies.

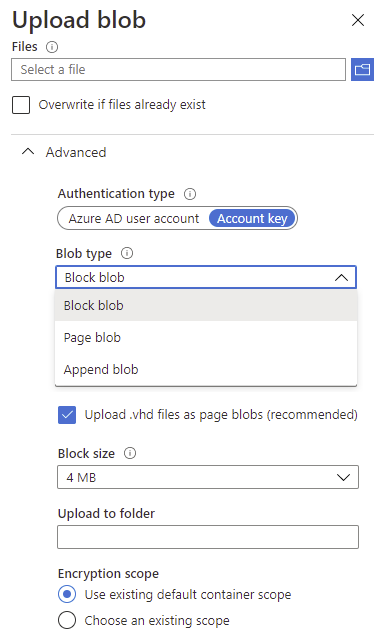
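The requirements and rule filters above can be modeled as two small checks. In this Python sketch, the `Account`, `Blob`, and `ReplicationRule` types and the account names are hypothetical simplifications; in practice Azure validates these settings itself when you create the replication policy. A rule with no prefixes replicates every blob in the source container, and snapshots are always skipped.

```python
from dataclasses import dataclass, field


@dataclass
class Account:
    """Simplified, hypothetical view of the settings object replication depends on."""
    name: str
    versioning: bool
    change_feed: bool
    access_tier: str  # default access tier of the account, e.g. "hot" or "cool"


@dataclass
class Blob:
    name: str
    is_snapshot: bool = False


@dataclass
class ReplicationRule:
    source_container: str
    destination_container: str
    prefixes: list = field(default_factory=list)  # empty list: replicate all blobs


def unmet_requirements(source: Account, destination: Account) -> list:
    """List the requirements that block creating a replication policy."""
    problems = []
    for account in (source, destination):
        if not account.versioning:
            problems.append(f"{account.name}: blob versioning is disabled")
        if account.access_tier not in ("hot", "cool"):
            problems.append(f"{account.name}: tier {account.access_tier!r} is not Hot or Cool")
    if not source.change_feed:
        problems.append(f"{source.name}: change feed is disabled")
    return problems


def blobs_to_replicate(rule: ReplicationRule, blobs: list) -> list:
    """Select the blobs a rule copies; snapshots are never replicated."""
    return [
        blob for blob in blobs
        if not blob.is_snapshot
        and (not rule.prefixes or any(blob.name.startswith(p) for p in rule.prefixes))
    ]


source = Account("contososrc", versioning=True, change_feed=True, access_tier="hot")
destination = Account("contosodst", versioning=True, change_feed=False, access_tier="cool")
print(unmet_requirements(source, destination))  # []

rule = ReplicationRule("photos", "photos-replica", prefixes=["2024/"])
blobs = [Blob("2024/a.jpg"), Blob("2023/b.jpg"), Blob("2024/a.jpg", is_snapshot=True)]
print([b.name for b in blobs_to_replicate(rule, blobs)])  # ['2024/a.jpg']
```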

Upload blobs

- Blob Types:

  - Block Blobs: Composed of blocks of data; used mainly for text and binary files such as documents, images, and videos.

  - Append Blobs: Made up of blocks like block blobs, but optimized for append operations, which makes them useful for logging.

  - Page Blobs: Can be up to 8 TB in size and are efficient for frequent random read/write operations. Azure virtual machines use page blobs for OS and data disks.

  - Note: The default type for a new blob is a block blob, and you can't change a blob's type after it's created.

- Blob Upload Tools:

  - AzCopy: A command-line tool for copying data to and from Blob Storage, and between containers or storage accounts.

  - Azure Data Box Disk: Uses physical disks to transfer large on-premises datasets to Blob Storage, especially when network bandwidth is limited.

  - Azure Import/Export: Imports large amounts of data into Blob Storage from hard drives that you ship to an Azure datacenter, or exports data from Blob Storage to drives that Microsoft ships back to you.

The following example shows how to upload blob data in Azure Storage Explorer. After you identify the files to upload, you choose the blob type, the block size, and the destination folder in the container. You also set the authentication method and encryption scope.
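The block size option reflects how block blob uploads work: the client splits a file into blocks, stages each block under a base64-encoded block ID (all IDs for one blob must have the same length), and then commits the ordered list of IDs to form the blob. The Python sketch below simulates those two steps locally; it does not call Azure. In the `azure-storage-blob` SDK the corresponding calls are `BlobClient.stage_block` and `BlobClient.commit_block_list`, and tools such as AzCopy and Storage Explorer do this splitting for you.

```python
import base64


def block_id(index: int) -> str:
    """Base64-encoded block ID; zero-padding keeps every ID for a blob the same length."""
    return base64.b64encode(f"block-{index:06d}".encode("ascii")).decode("ascii")


def stage_blocks(data: bytes, block_size: int) -> dict:
    """Split the payload into blocks keyed by ID (the 'stage' step)."""
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    return {
        block_id(i): data[offset:offset + block_size]
        for i, offset in enumerate(range(0, len(data), block_size))
    }


def commit_block_list(staged: dict, ordered_ids: list) -> bytes:
    """Assemble the blob from staged blocks in the committed order (the 'commit' step)."""
    return b"".join(staged[bid] for bid in ordered_ids)


payload = bytes(range(256)) * 40           # 10,240 bytes of sample data
staged = stage_blocks(payload, block_size=4096)
print(len(staged))                         # 3 blocks: 4096 + 4096 + 2048 bytes
assert commit_block_list(staged, list(staged)) == payload
```

Staging blocks separately is what lets large uploads proceed in parallel and resume after a failed block, since nothing becomes part of the blob until the block list is committed.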

Pricing for Azure Blob Storage

- Storage Costs: The per-gigabyte cost of storing data decreases as the access tier gets cooler.

- Data Access Costs: Increase as the tier gets cooler; per-gigabyte charges apply to reads in the Cool and Archive tiers.

- Transaction Costs: Apply to all tiers and increase as the tier gets cooler.

- Geo-replication Data Transfer Costs: Apply only to accounts configured for geo-replication, such as GRS and RA-GRS, and are billed per gigabyte.

- Outbound Data Transfer Costs: Charged per gigabyte for data transferred out of an Azure region.

- Changes to the Storage Tier (changing an account's default access tier):

  - Changing from Cool to Hot: Incurs a charge equivalent to reading all existing data in the account.

  - Changing from Hot to Cool: Incurs a charge equivalent to writing all data into the Cool tier (for GPv2 accounts).
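To see how storage, data access, and transaction costs trade off between tiers, here is a rough monthly estimate in Python. The unit prices are made-up placeholders, not real Azure rates (check the Azure pricing page for current figures), and the sketch ignores geo-replication and outbound transfer charges.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TierPrices:
    """Placeholder unit prices for illustration only, not real Azure rates."""
    storage_per_gb_month: float
    retrieval_per_gb: float    # per-GB read charge (zero for Hot in this sketch)
    per_10k_operations: float  # transaction charge


def monthly_cost(prices: TierPrices, stored_gb: float, read_gb: float, operations: int) -> float:
    """Storage + data access + transaction costs; ignores geo-replication and egress."""
    storage = stored_gb * prices.storage_per_gb_month
    retrieval = read_gb * prices.retrieval_per_gb
    transactions = operations / 10_000 * prices.per_10k_operations
    return round(storage + retrieval + transactions, 2)


HOT = TierPrices(storage_per_gb_month=0.02, retrieval_per_gb=0.0, per_10k_operations=0.05)
COOL = TierPrices(storage_per_gb_month=0.01, retrieval_per_gb=0.01, per_10k_operations=0.10)

# Mostly idle data: 1,000 GB stored, 50 GB read, 100,000 operations per month.
print(monthly_cost(HOT, 1000, 50, 100_000), monthly_cost(COOL, 1000, 50, 100_000))  # 20.5 11.5
# Busy data: same 1,000 GB, but 2,000 GB read and 5,000,000 operations.
print(monthly_cost(HOT, 1000, 2000, 5_000_000), monthly_cost(COOL, 1000, 2000, 5_000_000))  # 45.0 80.0
```

With these placeholder numbers, the Cool tier wins for idle data while the Hot tier wins once reads and transactions dominate, which is the trade-off the access tiers are designed around.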

Azure Blob Storage offers a flexible, cost-effective solution for managing unstructured data across a wide range of business needs. By combining access tiers, lifecycle management, and object replication, businesses can tailor their data storage and access strategies to meet evolving requirements.